Lent my iphone to my sister, nephew threw hers down the toilet, literally... Ouch!

Posts

Showing posts from May, 2009

Data Integrity through DEFINE.XML


You can use the DEFINE.PDF / DEFINE.XML files created for an electronic submission to review your own data. This review is usually performed by an independent reviewer outside the development team, whose fresh perspective adds a layer of redundancy that helps ensure the accuracy and integrity of your data. It lets you catch discrepancies that might otherwise only be found during a review by regulatory agencies. The following steps help ensure that both your domain documentation and the data it describes are accurate.

Step 1: Verify that all hyperlinks, such as those to the external transport (XPT) files, point to the right files. This ensures that the domain document itself has accurate hyperlinks.

Step 2: In the list of datasets at the top, verify the key fields. Ensure that the following criteria are met:

- The key field exists and is listed first in the list of variables.
- The dataset is sorted by the key fields.

Step 3: Verify all d
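Steps 1 and 2 above lend themselves to partial automation. The following is a minimal Python sketch (not SAS), assuming the define.xml links to files through `xlink:href` attributes and that the dataset rows have already been loaded as a list of dicts (for example from a CSV export, or via `pandas.read_sas` for XPT files). The function names `broken_links` and `key_problems` are hypothetical, and the key check assumes the key variables must appear first and in the same order as listed.

```python
import os
import xml.etree.ElementTree as ET

# xlink:href written in ElementTree's {namespace}localname form.
XLINK_HREF = "{http://www.w3.org/1999/xlink}href"


def broken_links(define_xml: str, base_dir: str) -> list[str]:
    """Step 1: return every xlink:href whose target file is not found under base_dir."""
    root = ET.fromstring(define_xml)
    missing = []
    for elem in root.iter():
        href = elem.get(XLINK_HREF)
        # Drop any fragment such as "#page=3" before checking the file itself.
        target = href.split("#", 1)[0] if href else ""
        if target and not os.path.exists(os.path.join(base_dir, target)):
            missing.append(href)
    return missing


def key_problems(variables: list[str], keys: list[str], rows: list[dict]) -> list[str]:
    """Step 2: check that the key variables exist, are listed first, and order the rows."""
    absent = [k for k in keys if k not in variables]
    if absent:
        return [f"missing key variables: {absent}"]
    problems = []
    # Assumption: keys must lead the variable list in exactly the documented order.
    if variables[: len(keys)] != keys:
        problems.append("key variables are not listed first")
    key_values = [tuple(row[k] for k in keys) for row in rows]
    if key_values != sorted(key_values):
        problems.append("dataset is not sorted by the key variables")
    return problems
```

Both functions return a list of findings rather than raising, so a reviewer can collect every problem in one pass and record it in the review log.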

Validation of the SAS System


Validating a new version of SAS on a production server used to be a daunting task. SAS versions 9.1.3 and 9.2 ship with user-friendly installation qualification tools, which, together with existing tools, make SAS easier to validate. Beyond qualifying the installation, other tasks and components of the system need to be validated or verified. Some of these components include:

- Backward compatibility with datasets and format catalogs created by older versions.
- Validation of multi-use macros and standardized code templates.
- Verification of standalone or project-specific programs and output.
- Effects on standard operating procedures and programming practices.

Because the SAS computing environment is so interconnected, validating a SAS System requires considerable effort. However, when validation is executed successfully, it can provide greater traceability between output, programs and source data. The performance qualification also sheds light on ways of opt
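One way to verify standalone programs and output after an upgrade is to rerun them under the new version and compare the results with a baseline produced by the previously validated version; within SAS, PROC COMPARE is the usual tool for this. The Python sketch below illustrates the same idea outside SAS, assuming both outputs have been exported to CSV with a unique key column. The function name `compare_outputs` and the CSV layout are hypothetical.

```python
import csv
import io


def compare_outputs(baseline_csv: str, candidate_csv: str, key: str) -> list[str]:
    """Compare two CSV extracts of the same output, matching rows on `key`."""
    base = {row[key]: row for row in csv.DictReader(io.StringIO(baseline_csv))}
    cand = {row[key]: row for row in csv.DictReader(io.StringIO(candidate_csv))}
    findings = []
    # Rows that exist in only one of the two outputs.
    for k in sorted(base.keys() - cand.keys()):
        findings.append(f"{key}={k}: missing from candidate")
    for k in sorted(cand.keys() - base.keys()):
        findings.append(f"{key}={k}: new in candidate")
    # Cell-level differences for rows present in both outputs.
    for k in sorted(base.keys() & cand.keys()):
        for col in sorted(base[k].keys() | cand[k].keys()):
            old, new = base[k].get(col), cand[k].get(col)
            if old != new:
                findings.append(f"{key}={k}: {col} changed from {old!r} to {new!r}")
    return findings
```

Values are compared as text, so "3.0" versus "3" counts as a change; a real comparison of numeric output may need a tolerance.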

Bringing SAS ODS Output to the Web


The SAS® System gives you the ability to create a wide range of web-ready reports. This paper walks through a series of examples showing what you can do with Base SAS and when you need SAS/IntrNet. Starting with the simplest HTML reports, it shows how you can jazz up your output using the STYLE= options, traffic-lighting and hyperlinking available in the reporting procedures PRINT, REPORT and TABULATE. With SAS/IntrNet you can add features such as data-driven drill-down links, and you can produce dynamic reports created on the fly for individual users. With this technique, your clients can navigate to exactly the information they need to meet your business objectives.

SAS ODS Overview

In the SAS System, both the Output Delivery System (ODS) and SAS/IntrNet produce documents for viewing over the Internet. All SAS users have ODS because it is part of Base SAS, but SAS/IntrNet is a separate product which you m
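Traffic-lighting means coloring a cell according to the range its value falls in; in Base SAS this is commonly done by pointing a STYLE= attribute such as the background color at a user-defined format. The Python sketch below is not SAS code; it only illustrates the logic of range-based coloring plus a static drill-down link on each row. The thresholds, colors, column names and the `detail_<region>.html` link pattern are all hypothetical.

```python
from html import escape


def traffic_light(value: float) -> str:
    """Return a background color for value, like a range-based format (hypothetical thresholds)."""
    if value < 50:
        return "#f4cccc"  # red: below 50
    if value < 80:
        return "#fff2cc"  # yellow: 50 up to 80
    return "#d9ead3"  # green: 80 and above


def html_report(rows: list[tuple[str, float]]) -> str:
    """Build an HTML table with traffic-lighted cells and a drill-down link per row."""
    lines = ["<table>", "<tr><th>Region</th><th>Score</th></tr>"]
    for region, score in rows:
        link = f"detail_{region.lower()}.html"  # hypothetical per-region detail page
        lines.append(
            f'<tr><td><a href="{escape(link)}">{escape(region)}</a></td>'
            f'<td style="background-color:{traffic_light(score)}">{score:g}</td></tr>'
        )
    lines.append("</table>")
    return "\n".join(lines)


if __name__ == "__main__":
    print(html_report([("East", 42.0), ("West", 91.5)]))
```

Keeping the color rule in one function mirrors the SAS approach of defining the ranges once in a format and reusing it across reports.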

Effective Ways to Manage Coding Dictionaries


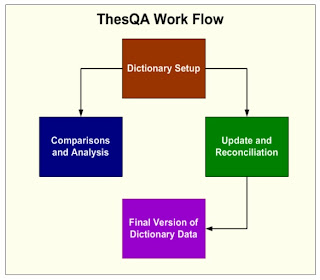

Coding dictionaries such as MedDRA and WHO Drug can be a challenge to manage across new versions and under change control. This becomes even more difficult when different collaborators, such as CROs, deliver coded data with different coding decisions from various dictionaries. Reconciling these differences can be very resource intensive. This paper addresses these challenges and suggests techniques and tools for comparing and reporting on the differences among dictionaries. It demonstrates strategies for reconciling and managing changes to achieve consistent coding of adverse events, concomitant medications and medical history.

Controlled Terminology Overview

Coding decisions for adverse events and medications are part science and part art. There is room for interpretation left to the person deciding which preferred term or hierarchical System Organ Class (SOC) is associated with the verbatim term. This may differ slightly between projects with different drugs and indications. The difference
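A first step in reconciling coded data from two collaborators (or two dictionary versions) is to list every verbatim term that was coded differently, or coded by only one source. The Python sketch below assumes each source has been reduced to a mapping from verbatim term to a (preferred term, SOC) pair. The `Coding` type, the normalization rule (uppercase, collapsed whitespace) and the sample terms in the usage are illustrative assumptions, not real coding decisions.

```python
from typing import NamedTuple, Optional


class Coding(NamedTuple):
    preferred_term: str
    soc: str


Diff = tuple[str, Optional[Coding], Optional[Coding]]


def normalize(verbatim: str) -> str:
    """Assumed rule: ignore case and extra spaces when matching verbatim terms."""
    return " ".join(verbatim.upper().split())


def coding_differences(a: dict[str, Coding], b: dict[str, Coding]) -> list[Diff]:
    """List verbatim terms coded differently, or only once, across two sources."""
    # If two verbatims normalize to the same text, the last one read wins.
    norm_a = {normalize(k): v for k, v in a.items()}
    norm_b = {normalize(k): v for k, v in b.items()}
    diffs = []
    for term in sorted(norm_a.keys() | norm_b.keys()):
        ca, cb = norm_a.get(term), norm_b.get(term)
        if ca != cb:
            diffs.append((term, ca, cb))
    return diffs


if __name__ == "__main__":
    cro_a = {"headache": Coding("Headache", "Nervous system disorders"),
             "upset stomach": Coding("Dyspepsia", "Gastrointestinal disorders")}
    cro_b = {"Headache ": Coding("Headache", "Nervous system disorders"),
             "Upset  stomach": Coding("Abdominal discomfort", "Gastrointestinal disorders"),
             "rash": Coding("Rash", "Skin and subcutaneous tissue disorders")}
    for term, left, right in coding_differences(cro_a, cro_b):
        print(term, left, right, sep=" | ")
```

The resulting list is the kind of difference report a reviewer could then resolve term by term under change control.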